Source: EEWeb

This is part 3 of a 3-part series called “Hardware Emulation: Realizing its Potential.” The series looks at the progression of hardware emulation from its early days far into the new millennium. Part 1 covered the early years, from the technology's inception around 1986 to about 2000, when it found limited use. Part 2 traced how hardware emulation changed as it became the hub of system-on-chip (SoC) design verification methodology. Part 3 envisions what to expect from hardware emulation in the future, given the dramatic changes in the semiconductor and electronic system design process.

Introduction

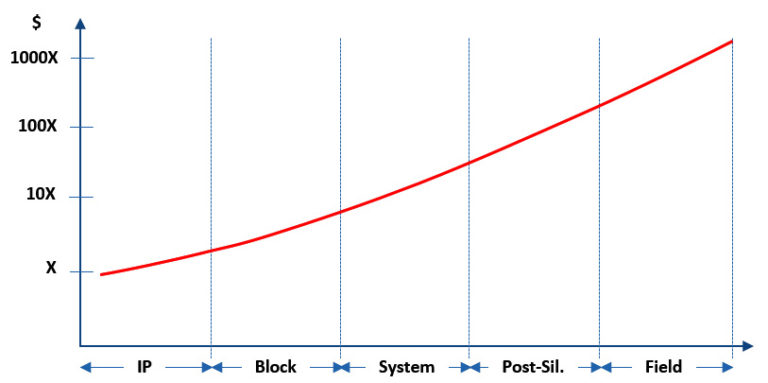

The reality of testing any electronic circuit or device is that the cost of finding and fixing a bug increases about tenfold at each successive stage of the verification flow: from intellectual property (IP) to block, sub-system, and system level, then post-silicon, and finally in the field. Therefore, the sooner a bug is found, the less expensive it is to fix (Figure 1).

Figure 1: The cost of bug fixing in the verification flow as charted on a logarithmic scale. Source: System Semantics
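The tenfold-per-stage rule of thumb can be made concrete with a short Python sketch. It assumes a base cost of one unit at the IP stage and a constant factor of 10 between stages; the stage list follows the text, while the function name `relative_fix_cost` is purely illustrative. Under these assumptions, a bug that escapes to the field costs about 100,000 times as much to fix as one caught at the IP level.

```python
# Minimal sketch of the rule of thumb: the cost of fixing a bug grows roughly
# tenfold at each successive stage of the verification flow.
# Assumption: a base cost of 1 unit at the IP stage and a constant factor of 10.

STAGES = ["IP", "block", "sub-system", "system", "post-silicon", "field"]
GROWTH_PER_STAGE = 10  # "about tenfold" per stage, per the text


def relative_fix_cost(stage: str, base_cost: float = 1.0) -> float:
    """Return the relative cost of fixing a bug first found at `stage`."""
    index = STAGES.index(stage)  # raises ValueError for an unknown stage
    return base_cost * GROWTH_PER_STAGE ** index


if __name__ == "__main__":
    for stage in STAGES:
        print(f"{stage:>12}: {relative_fix_cost(stage):>9,.0f}x")
```

Plotted on a logarithmic axis, as in Figure 1, this model is a straight line, which is why the shift-left argument focuses on catching bugs at the earliest stages.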

To improve the verification return on investment (ROI), it is important to debug a design as much as possible in the early stages and move to the next stage after achieving a high degree of coverage. This is at the core of the “shift-left” trend in the verification flow.

Of all the verification tools in the toolbox, hardware emulation is the best fit for this task. Only emulation can meet the challenge and unearth hardware and software bugs that arise from the interaction between the two domains.

Market Segmentation and Verification Needs

Over the past three decades, the design verification landscape has changed dramatically. Today’s designs, from simple Internet of Things (IoT) edge devices to the most complex processor and networking SoCs, include embedded software: operating systems, drivers, apps, diagnostics, and possibly test software. Verification of such embedded designs is daunting, and it must be planned carefully before execution at every stage of the verification flow.

To start with, a complex SoC design or, even more challenging, a system-of-systems (SoS) design made up of multiple SoCs may exceed one billion gates, possibly reaching several billion. No software-based verification tool can handle designs of this size. Even commercial field-programmable gate array (FPGA)-based prototyping platforms do not support this level of complexity; only custom, in-house FPGA-based prototyping systems can be built for this capacity.

The same design may require several tera-cycles of verification for thorough hardware verification, hardware and software integration, embedded software validation, and finally, system validation prior to tape-out. One tera-cycle is 10^12 cycles, or a million million cycles. At 1 MHz, an emulation platform would have to run 24/7 for about 11.6 days to cover a single tera-cycle.
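The runtime arithmetic can be checked with a few lines of Python. This is a minimal sketch, assuming the emulator sustains its clock rate nonstop with no compile, setup, or debug overhead; the helper `emulation_days` and the 2 MHz and 5 MHz data points are illustrative, not figures from any particular platform.

```python
SECONDS_PER_DAY = 24 * 60 * 60
TERA_CYCLE = 1e12  # one tera-cycle = 10**12 cycles


def emulation_days(cycles: float, clock_hz: float) -> float:
    """Days of nonstop (24/7) running needed to execute `cycles` at `clock_hz`.

    Assumes the clock rate is sustained with no overhead of any kind.
    """
    return cycles / clock_hz / SECONDS_PER_DAY


if __name__ == "__main__":
    for mhz in (1, 2, 5):  # illustrative emulation clock rates
        days = emulation_days(TERA_CYCLE, mhz * 1e6)
        print(f"{mhz} MHz: {days:.1f} days per tera-cycle")
```

Because runtime scales linearly with cycle count and inversely with clock rate, a job of several tera-cycles at 1 MHz stretches past a month: three tera-cycles take about 35 days.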

As important as clock speed is, if the bandwidth of I/O traffic in and out of the design under test (DUT) is inadequate, testing will be painfully slow. The carrying capacity of vehicles serves as a good analogy. A one-passenger sports car running four times faster than a 50-passenger bus would take about 25 times as long as the bus to carry all 50 passengers to the same destination, because it must shuttle back and forth, making 50 outbound trips and 49 return trips. Different emulation platforms have different clock speeds and different levels of I/O bandwidth. Their clock speeds differ by at most a few megahertz, but wide gaps separate their bandwidths. Moreover, the bandwidth in in-circuit emulation (ICE) mode can differ dramatically from the bandwidth in co-modeling based on a transactional interface.
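The factor of roughly 25 in the bus analogy follows from counting trips. The sketch below assumes the car must return empty for each new passenger and that loading time is negligible; `delivery_time` is a hypothetical helper. The 50-seat bus stands in for a wide I/O channel, and the faster car for a higher clock rate paired with a narrow channel.

```python
def delivery_time(passengers: int, capacity: int, trip_time: float) -> float:
    """Time to deliver all passengers when the vehicle returns empty between loads.

    Assumes loading is instantaneous and the final one-way trip needs no return.
    """
    loads = -(-passengers // capacity)  # ceiling division
    legs = 2 * loads - 1  # every load goes out; all but the last come back
    return legs * trip_time


BUS_TRIP = 1.0           # one-way bus travel time (arbitrary unit)
CAR_TRIP = BUS_TRIP / 4  # the sports car is four times faster

bus = delivery_time(50, capacity=50, trip_time=BUS_TRIP)  # 1 leg
car = delivery_time(50, capacity=1, trip_time=CAR_TRIP)   # 99 legs
print(f"car/bus time ratio: {car / bus:.2f}")  # 99 legs * 0.25 = 24.75
```

The car's fourfold speed advantage is swamped by its fiftyfold capacity deficit, just as a faster emulator clock cannot compensate for a narrow I/O channel.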

Design capacity, clock speed, and I/O bandwidth are three stringent requirements that rule out most verification tools except hardware emulation. FPGA-based prototyping systems would be the best fit for an ICE application, since they can run at tens of megahertz, but they may not support a virtual environment and do not accommodate a one-billion-gate capacity.

Let’s review the verification needs of the most complex designs of today and what to expect in the next five years or so.

Networking

Networking SoCs such as Ethernet switches or routers have replaced processors and graphics chips as the most complex chip designs. Today, their size may reach a billion application-specific integrated circuit (ASIC)-equivalent gates, and it will continue to grow over the next few years.

Their massive size and extreme complexity stem from a large number of Ethernet ports, soon to exceed 1,000, combined with throughput of up to 400 Gbps and sub-microsecond latency. Improved redundancy and resiliency, which minimize performance degradation from network congestion, failures, and resource exhaustion during maximum utilization, are further considerations.

Verification of these designs calls for the best emulation system on the market. Hardware emulation can drive Ethernet traffic in and out of the DUT at more than one million packets per minute in a 1-Tbps Ethernet switch. By comparison, a hardware description language (HDL) simulator may max out at about 1,000 packets per day.
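To put these packet rates in perspective, a quick calculation using only the two figures from the text compares daily throughput. The constant names are illustrative; the emulation figure is treated as a lower bound and the simulation figure as an upper bound, so the result is a conservative estimate of the gap.

```python
# Packet rates quoted in the text (lower bound for emulation, upper bound for simulation).
EMULATION_PACKETS_PER_MINUTE = 1_000_000
SIMULATION_PACKETS_PER_DAY = 1_000
MINUTES_PER_DAY = 24 * 60

emulation_packets_per_day = EMULATION_PACKETS_PER_MINUTE * MINUTES_PER_DAY
speedup = emulation_packets_per_day / SIMULATION_PACKETS_PER_DAY

# Days an HDL simulator would need to process one minute of emulated traffic.
sim_days_per_emulated_minute = EMULATION_PACKETS_PER_MINUTE / SIMULATION_PACKETS_PER_DAY

print(f"Emulation advantage: at least {speedup:,.0f}x")
print(f"One emulated minute = {sim_days_per_emulated_minute:,.0f} simulated days")
```

In other words, one minute of emulated traffic would keep the simulator busy for roughly 1,000 days, or nearly three years.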

ICE mode works with real traffic, a plus for this application, but the large number of ports makes it burdensome and impractical, and ICE does not work well in a data center. Instead, a virtual Ethernet tester may be the most effective approach. Here, large I/O bandwidth in virtual mode is essential.

Storage

Despite heroic attempts by traditional hard-disk drive (HDD) manufacturers to compete and slow the momentum of the solid-state drive (SSD), the latter already dominates the market.

Compared with a networking SoC, an SSD controller is significantly smaller, perhaps reaching 100 million gates. Yet it poses unique verification challenges.

In an SSD controller, the embedded firmware determines the major features. To obtain the best performance and lowest power consumption, the firmware must be tuned to optimized hardware, and firmware and hardware development must proceed in parallel from the start. Firmware engineers participate in the hardware spec definition and gain intimate knowledge of the hardware details during the design phase. Testing early firmware on the hardware under development unearths bugs that would otherwise be found only after tape-out. Likewise, performance optimization can start early.

Hardware emulation can do the job, particularly in virtual mode. In ICE mode, the frequency ratios between the DUT and the peripherals are altered, since the DUT must be slowed down while the physical peripherals run at speed. In virtual mode, frequency ratios are preserved, since the peripherals are models that can be set to run at any frequency. This avoids discrepancies due to different speed ratios and enables realistic performance evaluations.
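A small numeric example shows how ICE distorts clock ratios while virtual mode preserves them. All clock values below are hypothetical (a 1 GHz DUT target, a 100 MHz peripheral, and a 1 MHz emulated DUT clock), chosen only to make the arithmetic easy to follow; `clock_ratio` is an illustrative helper.

```python
# Hypothetical clock figures, chosen only for illustration.
DUT_TARGET_HZ = 1e9    # DUT clock in the final silicon (assumed)
PERIPHERAL_HZ = 100e6  # peripheral clock, e.g. a flash interface (assumed)
EMULATED_DUT_HZ = 1e6  # DUT clock when mapped onto the emulator (assumed)


def clock_ratio(dut_hz: float, peripheral_hz: float) -> float:
    """Number of DUT cycles that elapse per peripheral cycle."""
    return dut_hz / peripheral_hz


silicon = clock_ratio(DUT_TARGET_HZ, PERIPHERAL_HZ)

# ICE: the DUT slows to emulator speed, but the physical peripheral does not.
ice = clock_ratio(EMULATED_DUT_HZ, PERIPHERAL_HZ)

# Virtual: the peripheral is a model, so it is slowed by the same factor as the DUT.
slowdown = DUT_TARGET_HZ / EMULATED_DUT_HZ
virtual = clock_ratio(EMULATED_DUT_HZ, PERIPHERAL_HZ / slowdown)

print(f"silicon {silicon:g}, ICE {ice:g}, virtual {virtual:g}")
```

In this example, ICE skews the DUT-to-peripheral ratio by a factor of 1,000 (from 10 to 0.01), so firmware timing and throughput measured in ICE would not reflect the real chip, whereas the virtual setup keeps the ratio at 10.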

Mobile

The mobile industry is on the verge of a revolution triggered by the upcoming 5G standard.

The current radio-frequency spectrum in the wireless world is rapidly becoming inadequate to support the volume of data traffic required for 8K UHD streaming video and the new world of connected cars, drones, and robots. As a data point, Cisco Systems estimates there will be 50 billion connected devices globally by 2020, and approximately one-quarter of them will be cars, aerial drones, industrial robots, and other types of machines.

5G tackles the challenge by adopting millimeter waves (wavelengths of 10 mm down to 1 mm) in the 30-300 GHz frequency range. Unfortunately, millimeter waves suffer from one major drawback: they do not easily travel through buildings or other obstacles, and they can be absorbed by rain and foliage. Overcoming this drawback while also supporting all previous standards (LTE, 4G, 3G, 2G) in the same design will make 5G chip development extremely complex. This demanding scenario will require plenty of processing power and a lot of design flexibility.

Design verification in this environment will be demanding. The inherent processing power of emulation, augmented by recent emulation apps such as low-power verification and estimation, embedded software validation, design-for-test (DFT) testing, and others to come, promises to tame the complexity.
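The wavelength range quoted for millimeter waves follows from λ = c / f, where c is the speed of light in vacuum. This short Python check (the function name `wavelength_mm` is illustrative) converts the band edges of 30 GHz and 300 GHz into free-space wavelengths of roughly 10 mm and 1 mm.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by SI definition


def wavelength_mm(freq_hz: float) -> float:
    """Free-space wavelength in millimeters: lambda = c / f."""
    return SPEED_OF_LIGHT_M_S / freq_hz * 1_000


for ghz in (30, 300):
    print(f"{ghz} GHz -> {wavelength_mm(ghz * 1e9):.2f} mm")
```

Wavelengths this short are comparable to raindrops and leaves, which is consistent with the absorption problem described above.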

Processors

The processor market is in a state of flux. The venerable Complex Instruction Set Computing (CISC) x86 architecture continues to dominate the PC and enterprise CPU markets through Intel and, to a lesser extent, AMD. The alternative Reduced Instruction Set Computing (RISC) approach championed by Arm is ubiquitous in the embedded processor market. A new entrant, the open RISC-V instruction set architecture, is fast gaining attention and becoming the preferred choice for IoT edge devices. To complicate the scenario, emerging neural-network computing implemented on FPGAs and GPUs is changing the dynamics of the processor market.

Hardware emulation was conceived three decades ago to verify processor and graphics designs, the largest designs of the time. Processors may no longer be the largest designs, although Intel-class CPUs are still among the biggest. Even so, processor designs benefit from hardware emulation through increased design productivity, improved design quality, and accelerated time to market. This trend will continue in the future.

Automotive

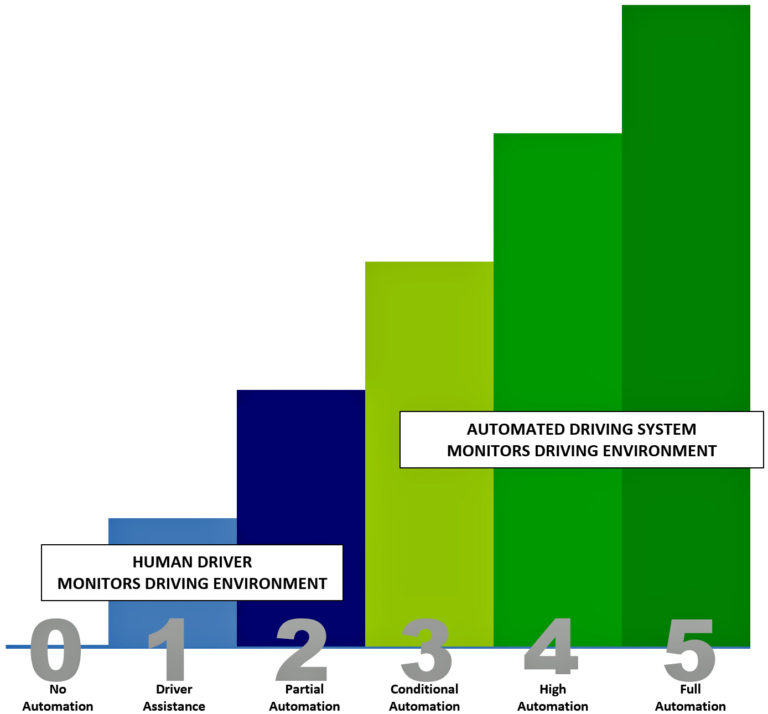

As codified by the Society of Automotive Engineers (SAE) International, the road to self-driving cars, or autonomous vehicles, passes through six levels, from level 0 (no automation) to level 5 (full automation) (Figure 2). Currently, the industry is approaching level 3.

Figure 2: According to SAE International, there are six levels of autonomous driving. Source: Society of Automotive Engineers (SAE) International

Design challenges at each level increase by at least one order of magnitude compared to the previous level. For example, a BMW sedan that shuttled visitors between hotels and the conference at CES 2018 was outfitted with 10 radar units, nine Light Detection and Ranging (LIDAR) units that bounced lasers off the surroundings to determine the car's location and sense obstacles, and two cameras, plus a multitude of electronics.

In this scenario, hardware emulation becomes mandatory, and its deployment will only increase as designs advance to higher levels of automation.
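The six levels and the order-of-magnitude growth in design challenge can be captured in a small Python data structure. This is a sketch under stated assumptions: the enum member names abbreviate the SAE J3016 level names, and the hypothetical helper `min_relative_challenge` simply applies the text's "at least tenfold per level" rule as a lower bound relative to level 0; it is not an SAE metric.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation (names abbreviated)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def min_relative_challenge(level: SAELevel) -> int:
    """Lower bound on design challenge relative to level 0.

    Assumption: at least a tenfold (one order of magnitude) increase per level,
    as stated in the text.
    """
    return 10 ** int(level)


for level in SAELevel:
    print(f"Level {level.value} {level.name}: >= {min_relative_challenge(level):,}x")
```

Under this rule, moving from today's level 3 to full automation at level 5 multiplies the design challenge by at least another factor of 100.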

Internet of Things (IoT)

Growth projections for the IoT market vary widely, but even the low-end projections are staggering.

According to a collection of IoT forecasts and market estimates, the global IoT market will grow from $157 billion in 2016 to $457 billion by 2020, a compound annual growth rate (CAGR) of 28.5%. According to analysis by GrowthEnabler and MarketsandMarkets, three sub-sectors will dominate the global IoT market: Smart Cities (26%), Industrial IoT (24%), and Connected Health (20%). Gartner estimated that 8.4 billion connected “things” were in use in 2017, up 31% from 2016, and that total spending on endpoints and services would approach $2 trillion that year. In 2017, businesses drove an estimated $964 billion in global endpoint spending, and consumer applications amounted to $725 billion. By 2020, hardware spending from both segments will reach almost $3 trillion (Table 1).

Table 1: IoT endpoint spending by category (in millions of U.S. dollars) shows enormous growth between 2016 and 2020. Source: Gartner (January 2017)

| Category | 2016 | 2017 | 2018 | 2020 |
|---|---|---|---|---|
| Consumer | 532,515 | 725,696 | 985,348 | 1,494,466 |
| Business: Cross-Industry | 212,069 | 280,059 | 372,989 | 567,659 |
| Business: Vertical-Specific | 634,921 | 683,817 | 736,543 | 863,662 |
| Grand Total | 1,379,505 | 1,689,572 | 2,094,881 | 2,925,787 |

These estimates clearly indicate that IoT is the new frontier of the semiconductor industry. Regardless of the application, IoT designs are embedded systems encompassing multicore processors, memory, and many peripherals. Their verification will be challenging, and hardware emulation will take center stage in this revolution.
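The growth in Table 1 can be summarized as a compound annual growth rate, CAGR = (end / start)^(1 / years) - 1. Applying it to the 2016 and 2020 columns over four compounding periods gives about 20.7% per year for total endpoint spending, with consumer spending growing fastest (about 29%) and vertical-specific business spending slowest (about 8%). The function name `cagr` is illustrative.

```python
# Endpoint spending from Table 1, in millions of U.S. dollars (Gartner, January 2017).
SPENDING = {
    "Consumer": (532_515, 1_494_466),
    "Business: Cross-Industry": (212_069, 567_659),
    "Business: Vertical-Specific": (634_921, 863_662),
    "Grand Total": (1_379_505, 2_925_787),
}
YEARS = 2020 - 2016  # four compounding periods


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


for category, (v2016, v2020) in SPENDING.items():
    print(f"{category:<28} {cagr(v2016, v2020, YEARS):6.1%}")
```

Note that this measures endpoint spending only, so it is not directly comparable to the 28.5% CAGR quoted for the overall IoT market.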

Conclusion

Three decades after its introduction, hardware emulation is now the preferred choice for design debugging. It is powerful and flexible, provides simulation-like design visibility, and scales with the increasing capacity of modern and future chips. It satisfies all the verification objectives of an SoC design, regardless of its type and application, from hardware verification and hardware/software integration to embedded software and system validation, including post-silicon validation.

About Lauro Rizzatti (Verification Consultant, Rizzatti LLC)

Dr. Lauro Rizzatti is a verification consultant and industry expert on hardware emulation. Previously, Dr. Rizzatti held positions in management, product marketing, technical marketing and engineering. (www.rizzatti.com)

About Jean-Marie Brunet (Director of Marketing, Mentor Emulation Division, Mentor, a Siemens Business)

Jean-Marie Brunet is the senior marketing director for the Emulation Division at Mentor, a Siemens business. He has served for over 20 years in application engineering, marketing and management roles in the EDA industry, and has held IC design and design management positions at STMicroelectronics, Cadence, and Micron among others. Brunet holds a Master of Science degree in Electrical Engineering from I.S.E.N Electronic Engineering School in Lille, France. (www.mentor.com)
