Source: EEWeb

By: Lauro Rizzatti and Jean-Marie Brunet

This series looks at the progression of hardware emulation from its early days to well into the new millennium. Part 1 covered the first decade, from its inception around 1986 to about 2000, when the technology found limited use. Part 2 traces the changes to hardware emulation as it became the hub of system-on-chip (SoC) design verification methodology. Part 3 envisions what to expect from hardware emulation in the future, given the dramatic changes in the semiconductor and electronic system design process.

Introduction

As the new millennium approached, electronic design automation (EDA) vendors saw a big market opportunity for hardware emulation. Application-specific integrated circuit (ASIC) designs became more complex, and a new class of designs called system on chip (SoC) started to replace traditional ASIC designs. SoC designs included significant embedded software: operating systems, drivers, applications, diagnostics and even test software. Combined, these trends made register transfer level (RTL) simulation alone insufficient.

Hardware emulation suppliers reached a consensus that architectures based on commercial field programmable gate arrays (FPGAs) were inadequate. They could not be reworked to eliminate their inherent drawbacks: primarily poor visibility into the design under test (DUT), but also long setup times and slow compilation.

Innovations came from a few pioneering startups that experimented with new ideas and new technologies.

New Design Approaches

Two different approaches based on custom silicon were at the foundation of two competing emulation architectures, both promising to remove the pitfalls of the old class of emulators.

One of them used a custom FPGA designed as an emulator on a chip that provided 100% visibility into the DUT without compiling probes. It also offered easier setup and significantly faster compilation. It was designed and commercialized by a French startup named Meta Systems, which Mentor Graphics acquired in 1996; Mentor later enhanced the technology in its Veloce family.

The other took a radically different approach, called custom-processor-based emulation: a vast array of tiny Boolean solvers that processed a DUT database stored in memory. It had the same objectives: 100% native visibility into the DUT, easier setup and very fast compilation. Originally conceived by IBM, the technology was eventually acquired by Cadence and promoted as the Palladium family.
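To make the processor-based idea more concrete, here is a minimal Python sketch of how such an emulator might evaluate a design, assuming a gate-level netlist that has already been levelized (ordered so that every gate's inputs are computed before the gate itself). The instruction format, the `evaluate_cycle` function and the one-operation-per-step model are hypothetical simplifications, not the actual Palladium architecture; a real system spreads such instructions across a very large number of processors working in parallel.

```python
from typing import Dict, List, Tuple

# Hypothetical instruction format: (output_net, operation, input_nets).
# The list is assumed to be levelized: every input is either a primary
# input or the output of an earlier instruction.
Instruction = Tuple[str, str, Tuple[str, ...]]

OPS = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NOT": lambda a: 1 - a,
}

def evaluate_cycle(program: List[Instruction], inputs: Dict[str, int]) -> Dict[str, int]:
    """Evaluate one clock cycle of combinational logic, one Boolean op per step."""
    nets = dict(inputs)  # every net value is kept in memory, so all of them stay readable
    for out, op, ins in program:
        nets[out] = OPS[op](*(nets[n] for n in ins))
    return nets

# A 1-bit full adder expressed as a levelized instruction list.
FULL_ADDER: List[Instruction] = [
    ("p", "XOR", ("a", "b")),
    ("sum", "XOR", ("p", "cin")),
    ("g", "AND", ("a", "b")),
    ("t", "AND", ("p", "cin")),
    ("cout", "OR", ("g", "t")),
]

result = evaluate_cycle(FULL_ADDER, {"a": 1, "b": 1, "cin": 1})
print(result["sum"], result["cout"])  # 1 1
```

Because every intermediate net ends up in `nets`, the sketch also hints at why this style of architecture can offer full visibility without compiling probes: the values already sit in memory.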

While the two custom-silicon architectures got most of the attention, the commercial FPGA-based method did not fade away. In one instance, a startup named Axis used FPGAs in an approach grounded in ReConfigurable Computing (RCC) technology. Axis was acquired by Verisity in 2003. In 2005, Cadence purchased Verisity and soon afterward shelved the Axis verification technology permanently.

In the other, a company named Emulation Verification Engineering (EVE) adopted the conventional approach, driven by a new generation of large-capacity FPGAs that allegedly alleviated the bandwidth bottleneck caused by the limited number of I/O pins. Synopsys acquired EVE in 2012 and continued development of its emulator, known as ZeBu.

Beyond ICE

New emulation architectures were not the only area of experimentation and innovation. The industry also looked at ways to expand the deployment of the emulator beyond the in-circuit emulation (ICE) mode. As discussed in Part 1, ICE suffers from drawbacks that limit its use to system validation driven by real traffic generated in the physical target system.

Back then, and even more so today, DUT testing was performed by software testbenches processed by hardware description language (HDL) simulation. By the 1990s, testbenches had evolved into complex and powerful software applications that taxed the simulators. The ability to accelerate them became the new frontier.

All emulator vendors attempted to address the issue. Initially, they approached the problem by interfacing the DUT inside the emulator to a software testbench written in Verilog via the Verilog programming language interface (PLI). They quickly discovered that the acceleration factor was limited to low single digits, which discouraged further development.

A new approach to writing testbenches opened the door to emulation.

Transaction-Based Verification

A verification breakthrough came with the creation of transaction-based testbenches. The motivating factor was the need to generate comprehensive test sequences to contend with the continued increase in SoC complexity.

The concept raised the level of abstraction of the testbench, now written in SystemVerilog, SystemC or C++, and captured the bit-level interaction with the DUT in a functional block called a transactor, essentially a protocol interface written in RTL code. This allowed designers to focus on creating complex testbenches and ignore the tedious pin-level details. Once created, transactors could be reused. The drawback was that these testbenches generated massive numbers of test cycles that slowed down the simulator.
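A transactor is essentially a translator between the two levels of abstraction. The Python sketch below models one under a loudly stated assumption: a simple UART-style serial protocol with one start bit, eight data bits sent LSB first, and one stop bit. The functions `drive_byte` and `monitor_bytes` are hypothetical names; in a real flow the bit-level side would be RTL mapped onto the emulator, and only the transaction-level calls would cross over to the host.

```python
from typing import List

def drive_byte(value: int) -> List[int]:
    """Transaction -> bit level: expand one 'send byte' transaction into per-cycle line values."""
    if not 0 <= value <= 0xFF:
        raise ValueError("byte out of range")
    bits = [0]                                    # start bit
    bits += [(value >> i) & 1 for i in range(8)]  # data bits, LSB first
    bits.append(1)                                # stop bit
    return bits

def monitor_bytes(line: List[int]) -> List[int]:
    """Bit level -> transaction: rebuild bytes from a stream of per-cycle line values."""
    out = []
    i = 0
    while i + 10 <= len(line):
        if line[i] != 0:  # line idles high; wait for a start bit
            i += 1
            continue
        frame = line[i:i + 10]
        if frame[9] != 1:
            raise ValueError(f"framing error at cycle {i + 9}")
        out.append(sum(bit << k for k, bit in enumerate(frame[1:9])))
        i += 10
    return out

# The testbench deals in whole bytes; the transactor deals in cycles.
stream = [1, 1] + drive_byte(0x41) + drive_byte(0x5A) + [1]
print(len(stream), monitor_bytes(stream))  # 23 [65, 90]
```

In this toy protocol, one call on the high-level side turns into ten cycles of pin activity, which illustrates why moving the bit-level half into hardware pays off.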

Hardware emulation came to the rescue. By mapping the transactor RTL code onto the emulator and interfacing it to the high-level testbench running on the host computer, users could achieve acceleration of three to five orders of magnitude. The best implementations run in the same speed ballpark as ICE.

With this approach, verification engineers could build a virtual test environment equivalent to a physical ICE testbench. Replacing the hardware testbench also allowed designers to run the DUT from remote locations, setting the stage for emulation data centers.

Expanded Adoption of the Emulation Technology

Virtualization

The transaction-based methodology still required the creation of the testbench. Mentor addressed this drawback with VirtuaLAB, a software-based testbench controllable from the host computer.

Virtual devices are functionally equivalent to physical peripherals but are not burdened by hardware dependencies, such as reset circuitry, cables, connectors, magnetic shields, nondeterminism and the associated headaches. They are based on tested software intellectual property (IP) that communicates with the protocol-specific RTL design IP embedded in the DUT mapped onto the emulator.

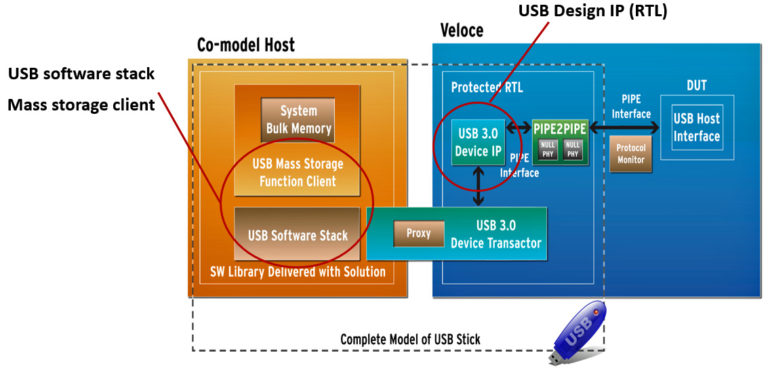

They may include software stacks running on the workstation connected to the emulator via a transaction-based interface (Figure 1). The entire package allows for testing IP at the device level, and DUT verification with realistic software or the device driver itself.

Many designers still use ICE because it exercises the DUT with real-world traffic. Two of the three emulation platform vendors claim to support ICE, although some implementations work better than others. However, once a designer experiences transaction-based verification through emulation, his or her verification perspective changes. The ability to quickly set up a powerful test environment, unfettered by cumbersome ICE hardware, makes debugging easier and more effective.

New Verification Objectives

In the new millennium, hardware emulation moved into the verification mainstream and is considered the foundation of many verification strategies. This was made possible by supporting several new emulation applications addressing tasks previously deemed inefficient or unachievable.

Today, verification engineers can perform low-power verification of multi-power-domain designs by modeling and emulating the switching on and off of power islands and related issues, such as state retention, corruption, isolation and level shifting, as defined in the Unified Power Format (UPF) file.
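The behaviors listed above can be illustrated with a deliberately tiny Python model of one power island. Everything here is a hypothetical simplification for illustration: the `PowerIsland` class, the use of `None` to stand in for an unknown (X) value, and the fixed isolation clamp value are assumptions, and level shifting is not modeled at all. The point is only the difference between a corrupted register, a retained register, and an isolated output.

```python
from dataclasses import dataclass
from typing import Optional

X: Optional[int] = None  # stands in for an unknown (corrupted) logic value

@dataclass
class PowerIsland:
    """Hypothetical model of one switchable power domain, UPF-style."""
    plain_reg: Optional[int] = 0      # ordinary flop: corrupted when power is removed
    retention_reg: Optional[int] = 0  # retention flop: keeps its value across shutdown
    powered: bool = True
    isolation_value: int = 0          # value isolation cells clamp outputs to while off

    def power_off(self) -> None:
        self.powered = False
        self.plain_reg = X            # corruption: non-retained state is lost

    def power_on(self) -> None:
        self.powered = True           # retention_reg still holds its pre-shutdown value

    def output(self) -> Optional[int]:
        """What the always-on logic sees on this island's output port."""
        return self.plain_reg if self.powered else self.isolation_value

island = PowerIsland(plain_reg=5, retention_reg=7)
island.power_off()
print(island.output())                         # 0  (isolated, not X)
island.power_on()
print(island.plain_reg, island.retention_reg)  # None 7
```

After power returns, `plain_reg` stays unknown until the design reinitializes it, which is exactly the kind of issue low-power emulation is meant to surface.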

They can accelerate power estimation by tracking switching activity at the functional level and directly feeding it to power estimation tools.
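A minimal sketch of the switching-activity tracking follows, assuming the emulator exposes per-cycle signal values as a Python dictionary of bit lists (a hypothetical interface). It counts toggles per signal and reports a toggle rate, the kind of data a power estimation tool consumes; real flows exchange this information in formats such as SAIF rather than Python objects.

```python
from typing import Dict, List

def toggle_counts(trace: Dict[str, List[int]]) -> Dict[str, int]:
    """Count 0->1 and 1->0 transitions of each signal across consecutive cycles."""
    return {
        name: sum(1 for prev, cur in zip(values, values[1:]) if prev != cur)
        for name, values in trace.items()
    }

def toggle_rates(trace: Dict[str, List[int]]) -> Dict[str, float]:
    """Toggles per cycle-to-cycle transition; 1.0 means the signal toggles every cycle."""
    counts = toggle_counts(trace)
    return {
        name: counts[name] / (len(values) - 1) if len(values) > 1 else 0.0
        for name, values in trace.items()
    }

trace = {
    "clk_en": [1, 1, 1, 1, 1],
    "data0": [0, 1, 0, 1, 0],
    "valid": [0, 1, 1, 0, 0],
}
print(toggle_counts(trace))  # {'clk_en': 0, 'data0': 4, 'valid': 2}
print(toggle_rates(trace))   # {'clk_en': 0.0, 'data0': 1.0, 'valid': 0.5}
```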

A design for test (DFT) application lets test engineers verify that DFT circuitry, automatically synthesized and inserted into the gate-level netlist, does not compromise the functional integrity of the design. This helps ensure there are no isolated scan chain or vector errors. Because DFT verification can be completed before tape-out, any errors can be fixed before the masks are created.

An application for hardware/software co-verification or integration confirms that embedded system software works as intended with the underlying hardware. It can trace a software bug as its effects propagate into the hardware and, conversely, a hardware bug that manifests itself in the software’s behavior.

Another application overcomes the non-deterministic nature of real-world traffic, one of the main drawbacks of ICE-based emulation. Real-world traffic is not repeatable, which makes debugging in the traditional ICE mode frustrating and time-consuming: if a particular traffic pattern exposes a fault, repeating the scenario is virtually impossible. Capturing the design activity from the initial ICE run in a database and replaying it without a connection to the real world creates a repeatable, deterministic environment. If a bug is detected, instead of trying to reproduce that traffic pattern on the original ICE setup, the verification team can use the replay database to rerun the test exactly as it ran the first time. This shortens the time required to unearth and fix bugs.
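The capture-and-replay idea can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: `dut_step` plays the design, an unseeded random generator plays the non-repeatable target system, and a Python list plays the replay database. In this sketch only the DUT's inputs are captured; because the modeled design is deterministic, identical inputs reproduce identical behavior.

```python
import random
from typing import Callable, List, Tuple

def dut_step(state: int, stimulus: int) -> Tuple[int, int]:
    """Hypothetical DUT: an 8-bit running checksum; returns (next_state, output)."""
    nxt = (state + stimulus) & 0xFF
    return nxt, nxt

def run(stimulus_source: Callable[[int], int], cycles: int) -> Tuple[List[int], List[int]]:
    """Clock the DUT, logging every input it receives and every output it produces."""
    state, captured, outputs = 0, [], []
    for cycle in range(cycles):
        stim = stimulus_source(cycle)
        captured.append(stim)  # capture database: what the target actually sent
        state, out = dut_step(state, stim)
        outputs.append(out)
    return captured, outputs

# ICE run: traffic from the target is not repeatable (modeled with an unseeded RNG).
live_traffic = random.Random()
captured, ice_outputs = run(lambda _: live_traffic.randrange(256), cycles=1000)

# Replay run: no target connected; the DUT is fed from the capture database instead.
_, replay_outputs = run(lambda cycle: captured[cycle], cycles=len(captured))

assert replay_outputs == ice_outputs  # deterministic, so a failing run can be repeated at will
```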

Emulation applications can accelerate several verification tasks, enabling new verification scenarios that address new vertical markets. They offer hardware verification engineers and software development teams many more options to increase productivity and mitigate verification risk.

FPGA Prototyping in the New Millennium

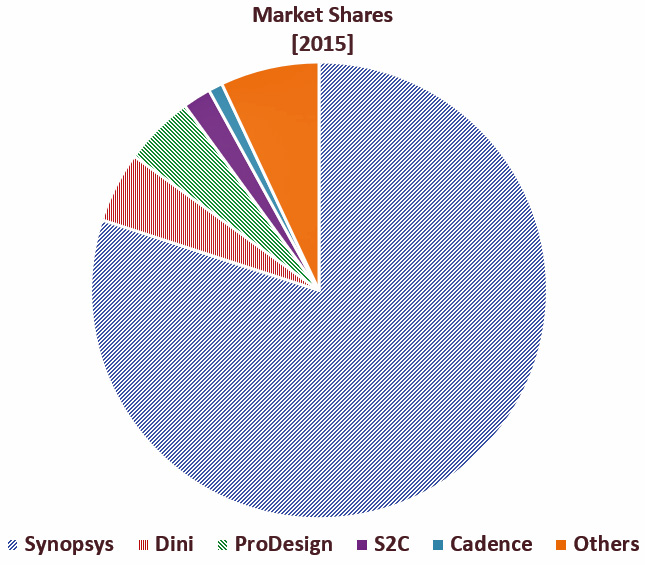

In the new millennium, the commercial FPGA prototyping market recorded a surge in demand and several startups were founded. By the end of the decade, through consolidation, only five players existed: Synopsys, The Dini Group, Pro Design, Cadence, and S2C.

Synopsys acquired Hardi, maker of a product called HAPS, and continues to upgrade the HAPS family with the latest generations of FPGAs.

Cadence started selling a board via an OEM agreement with The Dini Group. In 2017, it terminated the agreement and introduced its own prototyping board.

Currently, The Dini Group, Pro Design and S2C are the only independent vendors.

Table 1 compares the estimated percentage of designs using hardware emulation and FPGA prototyping in 2000 versus 2015.

| Verification Technology | Year 2000 | Year 2015 |
|---|---|---|
| Simulation at the Block Level | 100% | 100% |
| Emulation | << 1% | > 30% |
| In-house FPGA Prototyping | < 2% | > 30% |
| Commercial FPGA Prototyping | < 10% | > 80% |

Conclusion

In the first decade of the new millennium, industry consolidation left three emulation players: Mentor, Cadence and Synopsys. Each continues to base its emulation technology on one of the three approaches listed in Table 2.

Table 2: Each emulation provider uses a different approach to its technology. Source: Authors

| EDA Company | Emulation Technology |
|---|---|
| Cadence | Processor-based |
| Mentor | Custom-FPGA-based |
| Synopsys | Commercial-FPGA-based |

Today, all three develop and sell their own emulation platforms and compete in the market with new generations of emulators.

The FPGA prototyping market also saw consolidation with Synopsys leading the market.

In Part 3 of this series, we will look at the future of hardware emulation as designs become even more complex and engineers identify more use modes for this versatile tool. Check out EEWeb next Tuesday for Part 3.

About Lauro Rizzatti (Verification Consultant, Rizzatti LLC)

Dr. Lauro Rizzatti is a verification consultant and industry expert on hardware emulation. Previously, Dr. Rizzatti held positions in management, product marketing, technical marketing and engineering. (www.rizzatti.com)

About Jean-Marie Brunet (Director of Marketing, Mentor Emulation Division, Mentor, a Siemens Business)

Jean-Marie Brunet is the senior marketing director for the Emulation Division at Mentor, a Siemens business. He has served for over 20 years in application engineering, marketing and management roles in the EDA industry, and has held IC design and design management positions at STMicroelectronics, Cadence, and Micron, among others. Brunet holds a Master of Science degree in Electrical Engineering from I.S.E.N Electronic Engineering School in Lille, France. (www.mentor.com)