INTRODUCTION

There is no doubt that computers have changed our lives forever. Still, as much as computers outperform humans at tasks such as solving complex mathematical equations in almost no time, they may underperform at tasks that humans find easy, such as image identification. Anyone in the world can identify a picture of a cat in no time at all. The most powerful PC in the world may take hours to get the same answer.

The problem lies in the traditional central processing unit (CPU) built on the von Neumann architecture. Devised to overcome the inflexibility of early computers that were hardwired to perform a single task, the stored-program computer, credited to von Neumann, gained the flexibility to execute any program at the expense of lower performance.

Limitations of the stored-program computer, compounded by the limited data available for analysis and by inadequate algorithms to perform the analysis, conspired to delay the implementation of artificial intelligence (AI) and its subclasses, machine learning (ML) and deep learning (DL), for decades.

The turning point came around the beginning of this decade, when the error rate of DL-based image recognition started to decrease. In 2015 it dropped below the human error rate, as shown in Figure 1. The human error rate in image recognition is slightly higher than 5%. Today, DL is widely successful in image and video recognition.

CONVOLUTIONAL NEURAL NETWORKS

DL is built on convolutional neural networks (CNNs), artificial neural networks loosely modeled on the brain’s network of neurons. They consist of a huge array of billions or even trillions of simple arithmetic operators, including multipliers and adders, that are tightly interconnected.
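To make the role of the multipliers and adders concrete, the following is a minimal sketch of the sliding-window multiply-accumulate (MAC) operation at the heart of a convolutional layer. It is an illustration only, not a description of any particular accelerator; the function name `conv2d_valid`, the 4x4 input, and the 2x2 kernel are assumptions chosen for the example.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most DL frameworks, the kernel is not flipped, so this is strictly
    a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0  # accumulator fed by the adders
            for i in range(kh):
                for j in range(kw):
                    # one multiply-accumulate: a multiplier followed by an adder
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical 4x4 input and 2x2 kernel, chosen only for illustration.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [
    [1, 1],
    [1, 1],
]

feature_map = conv2d_valid(image, kernel)
# Number of MAC operations: one per kernel element per output value.
mac_count = len(feature_map) * len(feature_map[0]) * len(kernel) * len(kernel[0])
print(feature_map)
print(mac_count)
```

Even this toy case needs 36 MAC operations for a 3x3 output. Scaling the same loop nest to real image sizes, many channels, and many layers is what drives the operator counts quoted above.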

It is not the vast number of operators that makes a CNN complex. Rather, its complexity stems from the way the operators are layered, arranged, and interconnected. The largest CNN designs contain several billion ASIC-equivalent gates. Simpler CNN designs start at hundreds of millions of gates.

CNN designs are data-path oriented with limited control logic. Still, they challenge designers to implement fast and efficient data transfers between the layered arithmetic operators and the data memories.

DL designs “learn” what they are supposed to do via a training process that configures the multitude of weights and biases of the multipliers and adders. The more layers, the deeper the learning. The complexity of the task is exacerbated by the back-propagation algorithms used during training to refine the weights and biases.
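The idea of refining weights and biases can be sketched with the smallest possible case: a single neuron with one weight and one bias, trained by gradient descent. This is a simplified stand-in for back-propagation, which applies the same chain-rule update layer by layer. The function name `train_neuron`, the learning rate, the epoch count, and the sample data are all assumptions made for illustration.

```python
def train_neuron(samples, epochs=500, lr=0.05):
    """Fit y = w * x + b to (x, target) pairs with per-sample gradient descent."""
    w, b = 0.0, 0.0  # untrained weight and bias
    for _ in range(epochs):
        for x, target in samples:
            pred = w * x + b        # forward pass: one multiply, one add
            error = pred - target   # how far off the prediction is
            # backward pass: gradients of 0.5 * error**2 with respect to w and b
            w -= lr * error * x
            b -= lr * error
    return w, b


# Hypothetical training set generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))
```

After training, the forward-pass line alone (`w * x + b` with the learned values) is all that remains to be executed, which corresponds to inference.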

Once trained and configured, a DL design is deployed in the inference mode. See Figure 2.

CLASSICAL PROGRAMMING VERSUS MACHINE LEARNING

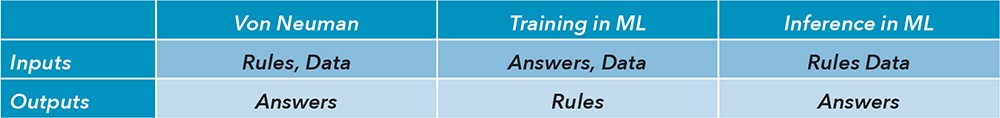

It is interesting to compare the input information fed into a CPU, and the output information it generates, with those of a CNN in training and a CNN in inference. See Table 1 below.

Table 1 – This matrix compares in/out information between von Neumann and DL processing. (Source: Mentor, a Siemens Business)

CNN PROCESSING POWER, COST, ACCURACY

The computational demand to perform learning and inference operations is imposing. DL training calls for intensive processing. While DL training is performed only once, a trained neural network must perform inference in potentially hundreds of thousands of applications, serving many millions of users who each make scores of requests and expect a quick response. This massive computational load requires processing resources that scale in performance, power consumption, and size at a price point that can be justified economically.

In general, digital computing can be carried out using floating-point or fixed-point numerical representations. It can also be executed at different levels of precision, measured by the number of bits used to hold the data. While CNN learning requires the higher precision provided by 16-bit or 32-bit floating-point math, CNN inference can benefit from 16-bit or 8-bit fixed-point math, or even lower precision. Several analyses performed in the past few years indicate that 8-bit inference leads to quality of results comparable to that obtained with 16-bit or 32-bit floating-point calculation.
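As an illustration of what moving from floating point to 8-bit fixed point involves, the sketch below applies symmetric linear quantization to a handful of weights and measures the rounding error introduced. This is one common scheme among several, not necessarily the one used in the analyses mentioned above; the function names and the weight values are assumptions.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0  # largest magnitude maps to +/-127
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical trained weights, chosen only for illustration.
weights = [0.823, -0.417, 0.052, -1.27, 0.636]

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q_weights)
print(max_error <= scale / 2 + 1e-12)  # rounding error is bounded by half a step
```

Each weight now fits in one byte instead of four, and the worst-case error is half a quantization step. Whether that error is acceptable depends on the network and has to be checked against its accuracy target.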

A rule of thumb captures the interdependency between precision and silicon requirements: reducing the precision of an arithmetic calculation by one bit roughly halves the silicon area and power needed to perform it. There is, however, a lower limit to the precision, below which the learned results break down.

WHERE DEEP LEARNING OCCURS

CNN computation can occur in four spatial locations: in datacenters, at the edge of datacenters, on desktops, and in embedded applications. Depending on whether it is learning or inference, some locations are better than others.

Training in a datacenter requires massive computational throughput to perform large tasks and achieve a high quality of results. On the downside, it consumes more power, occupies a large footprint, and costs orders of magnitude more than edge DL processing.

Conversely, DL computing at the edge is less demanding in processing throughput, but provides shorter latencies and accommodates constrained power budgets in smaller footprints. DL computing on desktops and in embedded applications shares the same profile as edge computing.

Ultimately, execution speed, power consumption, accuracy, size, and cost are driven by the application. Most applications favor lower cost, even at the expense of lower calculation precision.

DETERMINISTIC VERSUS STATISTICAL DESIGNS

Unlike a processor design or any other semiconductor design performing a function whose output is rigorous and deterministic, AI/ML/DL designs produce responses that are statistically correct. They implement complex algorithms that process input data and generate responses that are typically correct within a percentage of error. For instance, an image recognition algorithm running on a CNN design may identify a picture of a cat within a margin of error. The smaller the error, the more accurate the design.

This poses a challenge to the design verification team.
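One way to picture the challenge: instead of comparing every output bit against a golden reference, a check on a statistical design compares an aggregate error rate against a budget. The sketch below computes a top-1 error rate over a labeled set and applies a pass/fail threshold. The labels, the predictions, and the 15% budget are hypothetical values chosen for illustration.

```python
def top1_error(predictions, labels):
    """Fraction of samples whose predicted class differs from the true label."""
    wrong = sum(1 for p, l in zip(predictions, labels) if p != l)
    return wrong / len(labels)


# Hypothetical ground truth and design outputs for ten images.
labels = ["cat", "dog", "cat", "bird", "cat", "dog", "bird", "cat", "dog", "cat"]
predictions = ["cat", "dog", "cat", "bird", "dog", "dog", "bird", "cat", "dog", "cat"]

ERROR_BUDGET = 0.15  # assumed acceptance threshold, not taken from any standard

error = top1_error(predictions, labels)
passed = error <= ERROR_BUDGET
print(error, passed)
```

One misclassified image out of ten still passes here, which would count as a failure in a bit-exact comparison. A meaningful verdict also requires a test set large enough for the error rate to be statistically significant, which adds to the verification workload.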

HARDWARE EMULATION FOR DL DESIGN VERIFICATION

Currently, there are four distinct groups of companies developing silicon for AI and, more specifically, for DL acceleration.

The first group includes the established semiconductor companies, such as Intel, AMD, NVIDIA, IBM, Qualcomm and Xilinx. With a few exceptions, most have roots in the development of CPUs and application processors. The exceptions are NVIDIA, which leads the AI field with advanced graphics processing units (GPUs), and Xilinx (and Intel), which bet on the success of field programmable gate arrays (FPGAs) and are pushing the boundaries of FPGA technology.

Second, several large system/software companies such as Google, Amazon, Microsoft, Facebook and Apple are entering the silicon arena. While they started from scratch with no history of designing silicon, they have deep pockets that help them recruit the talent to fulfil the objective. They design application specific integrated circuits (ASICs) and systems on chips (SoCs) targeting CNN and explore bold new architectures.

Third, several intellectual property (IP) companies are actively developing IP consisting of advanced processing cores that offer huge computational capabilities. Among them are Arm, CEVA, Cadence, Synopsys, Imagination, Achronix, Flex Logix and many others.

Fourth, a plethora of startups with ambitious goals and substantial funding are populating the field. They include Wave Computing, Graphcore, Cerebras, Habana, Mythic and SambaNova, all creating ad hoc ASICs with some level of programmability.

Regardless of the type of silicon they develop, the designs share characteristics that together impose a unique set of challenges to design verification teams. See Figure 3.

Exhaustive Hardware Verification

As stated earlier, AI designs are among the largest, reaching two to four billion equivalent gates. A critical aspect of these designs is memory access and its bandwidth. Efficient memory accesses make or break a design.

Further, a high level of computational power and a low level of power consumption are mandatory. A popular metric to measure both and to compare designs is TOPS/watt (tera-operations per second per watt).

All of this calls for thorough and exhaustive hardware verification, supported by high debug productivity.
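As a worked example of the TOPS/watt metric, the sketch below derives a peak figure for an imaginary accelerator. The number of MAC units, the clock frequency, and the power figure are made-up values, and counting each MAC as two operations (one multiply, one add) is a common convention assumed here.

```python
def tops_per_watt(ops_per_second, watts):
    """Convert raw operations per second and power into TOPS/watt."""
    return ops_per_second / 1e12 / watts


# Hypothetical accelerator parameters, for illustration only.
mac_units = 4096   # parallel multiply-accumulate units
clock_hz = 1e9     # 1 GHz clock
ops_per_mac = 2    # one multiply plus one add per cycle
power_watts = 5.0  # assumed power at peak load

peak_ops = mac_units * ops_per_mac * clock_hz  # 8.192e12 operations per second
efficiency = tops_per_watt(peak_ops, power_watts)
print(f"{peak_ops / 1e12:.3f} TOPS, {efficiency:.4f} TOPS/watt")
```

This is a peak number. The sustained figure depends on how well memory bandwidth keeps the MAC units busy, which is one reason memory accesses deserve such close attention during verification.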

Comprehensive Software Validation

While the hardware is the foundation of CNN designs, software plays a critical role, adding another dimension to their complexity. Designs are built on sophisticated open-source software harnesses and frameworks created and sponsored by some of the big companies and better-known universities. The software is organized in a stack that includes drivers, OS, firmware, frameworks, algorithms, and finally, performance benchmarks, typically MLPerf or DAWNBench. See Figure 3 above.

The ultimate goal is to train a CNN before committing to silicon, a task that requires plenty of processing power. Reaching this objective accelerates time-to-market, which is critical in this hyper-competitive market, and at the same time achieves quality of results. The task falls to the design verification/validation team, who can meet the challenge via hardware emulation.

HARDWARE EMULATION REQUIREMENTS FOR CNN VERIFICATION

One of the prominent hardware emulation suppliers, Mentor, a Siemens Business, identified three main requirements for successfully deploying hardware emulation in the design verification of CNN accelerators. The requirements are:

- Scalability

CNN accelerators differ when developed for data centers versus edge installations.

In data centers, accelerators must deliver high computing power and large memory bandwidth, at the expense of a large footprint and significant power consumption.

At the edge, low latency, low power consumption, and a small footprint top the list of design attributes.

To succeed in the verification task, an emulator must provide a wide range of capacity and upward scalability to accommodate the next generations of designs. To be specific, the emulation platform should offer a capacity range from tens of millions of gates to 10 billion gates.

As designs scale up, the emulation platform ought to scale upward in capacity without compromising the performance necessary to execute AI frameworks and process MLPerf or DAWNBench benchmarks within the allocated verification schedule.

- Virtualization

For a few years now, the deployment of emulation in virtual mode has been increasing, replacing the in-circuit emulation (ICE) mode because of its multiple advantages. These include simpler deployment without the hardware dependencies typical of ICE, easy and deterministic design debug, high debug accuracy, the ability to perform several verification tasks not possible in ICE (power estimation, low-power verification, DFT verification, hardware/software debug…), and remote access for multiple concurrent users. The virtual mode moves the emulator out of the lab and into a data center, making it an enterprise resource.

For Mentor users, advantages are augmented with benefits offered by Veloce® VirtuaLAB that boost the emulation platform productivity by an order of magnitude, enabling more testing on shorter schedules.

Given the critical nature of verifying AI designs and their software stacks, virtualization allows for increasing levels of abstraction and ensures full design visibility and controllability to execute any verification suite in the search for design bugs. It also enables precise measurements of important system behavior parameters.

Virtualization enables the execution of a broad range of AI frameworks and of any performance benchmarks. These tasks would not be possible in ICE mode.

- Determinism

AI silicon is one component of a complex system that includes software and algorithms, as noted earlier. Development and optimization of all three require concurrency of tasks. It is crucial to guarantee that the hardware design is mapped into the emulator in exactly the same way for every iteration, whichever benchmarks, software and algorithms are run on it.

This is possible only by creating a deterministic emulation environment.

Design compilation must be a deterministic process: it must complete successfully and quickly, without trial and error, and produce the same results from one compile to the next.

Execution must be a deterministic process: emulation of frameworks, benchmarks and tests must run in a consistent order. Debug must be a deterministic process as well: problems found in emulation must be reproducible, calling for a repeatable debug methodology.
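A simple way to picture a determinism check is to run the same workload twice under identical conditions and compare a fingerprint of everything observed. The sketch below uses a seeded pseudo-random stimulus as a stand-in for an emulation run and hashes the resulting state trace. It does not use any emulator API; `run_workload`, the seeds, and the state-update rule are all hypothetical.

```python
import hashlib
import random


def run_workload(seed, steps=1000):
    """Stand-in for one emulation run: returns a digest of the observed state trace."""
    rng = random.Random(seed)  # private generator, so runs do not affect each other
    state = 0
    trace = hashlib.sha256()
    for _ in range(steps):
        stimulus = rng.randrange(256)              # pseudo-random input byte
        state = (state * 31 + stimulus) % (2**32)  # toy design-state update
        trace.update(state.to_bytes(4, "little"))  # record every state seen
    return trace.hexdigest()


first = run_workload(seed=42)
second = run_workload(seed=42)  # same stimulus: must reproduce the first run
other = run_workload(seed=43)   # different stimulus: expected to diverge

print(first == second)
print(first == other)
```

If two runs with identical inputs ever produce different digests, a failure seen in one run cannot be reliably reproduced in the next, and a repeatable debug methodology is no longer possible.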

CONCLUSION

AI/ML/DL designs are posing new challenges to design verification teams. Only hardware emulation can confront and tame those challenges.