Is it time for the industry to devise a nimbler, faster and more efficient methodology?

Source: Semiconductor Engineering

If you wonder how important low power is in chip design today, consider the recent blogosphere controversy surrounding Qualcomm’s Snapdragon 810 SoC, the company’s first flagship 64-bit chip and the likely engine of the top Android devices released in 2015. The story broke in early December, when reports claimed that the 810 had overheating problems.

Whether or not the reports were accurate, Samsung elected to replace the Snapdragon 810 with its own 7000-series Exynos processor in the just-announced Galaxy S6 smartphone.

Could this saga have been avoided? Yes, by using a better power estimation approach during the design and verification phase of the SoC.

Dynamic power estimation involves two critical metrics: average power consumption and peak power consumption. The two are not closely correlated, and each drives several critical decisions in the development process. By evaluating average power consumption, designers can decide the best die size, select the proper package, choose the battery size and calculate battery life. By assessing peak power consumption, they can determine chip reliability, size the power rails for peak loads, measure performance and evaluate cooling options.
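To make the split concrete, here is a back-of-envelope sketch in Python. All numbers are hypothetical assumptions (a 3000 mAh, 3.8 V battery; 1 W average and 5 W peak, a 5:1 ratio in line with the roughly 20% shown in Figure 1; a 0.9 V core rail), not data for the Snapdragon 810 or any other chip. Battery life follows from average power, while rail current has to be sized for the peak.

```python
# Hypothetical back-of-envelope sizing: every number below is an
# illustrative assumption, not data from a real SoC.

def battery_life_hours(capacity_mah: float, battery_v: float, avg_power_w: float) -> float:
    """Battery life is driven by AVERAGE power: stored energy / average draw."""
    energy_wh = capacity_mah / 1000.0 * battery_v  # mAh -> Ah, then Ah * V = Wh
    return energy_wh / avg_power_w

def peak_rail_current_a(peak_power_w: float, rail_v: float) -> float:
    """Rail sizing is driven by PEAK power: I = P / V on the supply rail."""
    return peak_power_w / rail_v

# Assumed: 3000 mAh / 3.8 V battery, 1 W average, 5 W peak on a 0.9 V core rail.
print(f"battery life: {battery_life_hours(3000, 3.8, 1.0):.1f} h")
print(f"peak core-rail current: {peak_rail_current_a(5.0, 0.9):.2f} A")
```

Sizing the rail from the 1 W average would underprovision it by a factor of five, which is why the two metrics have to be estimated separately.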

Dynamic power consumption depends heavily on the design’s activity during operation. This activity can be triggered by a testbench, either software-based or target-system-based, or by executing the embedded software.
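A common first-order model of CMOS switching power makes this dependence on activity explicit. It ignores short-circuit and leakage power and is offered here as a textbook approximation, not as the model any particular power tool uses:

$$
P_{\text{dyn}} = \frac{1}{2}\, V_{DD}^{2}\, f \sum_{i} \alpha_i\, C_i
$$

where $\alpha_i$ is the average number of transitions per clock cycle on net $i$, $C_i$ is the capacitance that net switches, $V_{DD}$ is the supply voltage and $f$ is the clock frequency. Averaging $\alpha_i$ over an entire session yields average power; evaluating it cycle by cycle yields the peak.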

Creating a testbench that thoroughly verifies the entire functionality of a SoC would be a massive undertaking. Fortunately, it can be avoided by booting an operating system and running drivers, applications and diagnostic software.

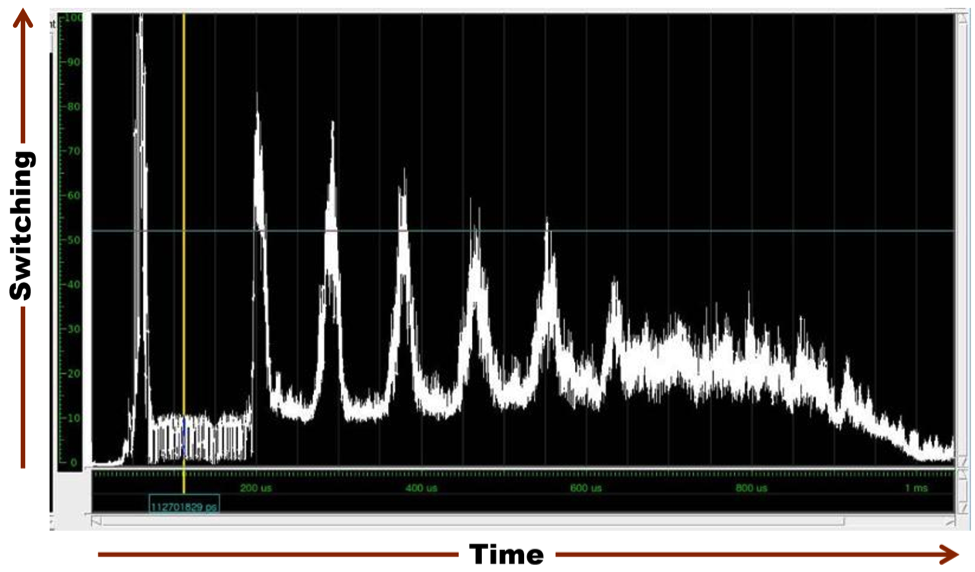

Figure 1 charts the power consumption of a SoC over time as it boots an OS and runs a few applications. It clearly shows that the average power consumption hovers around 20% of the highest peak.

Figure 1: SoC dynamic power consumption by booting an OS and running applications.

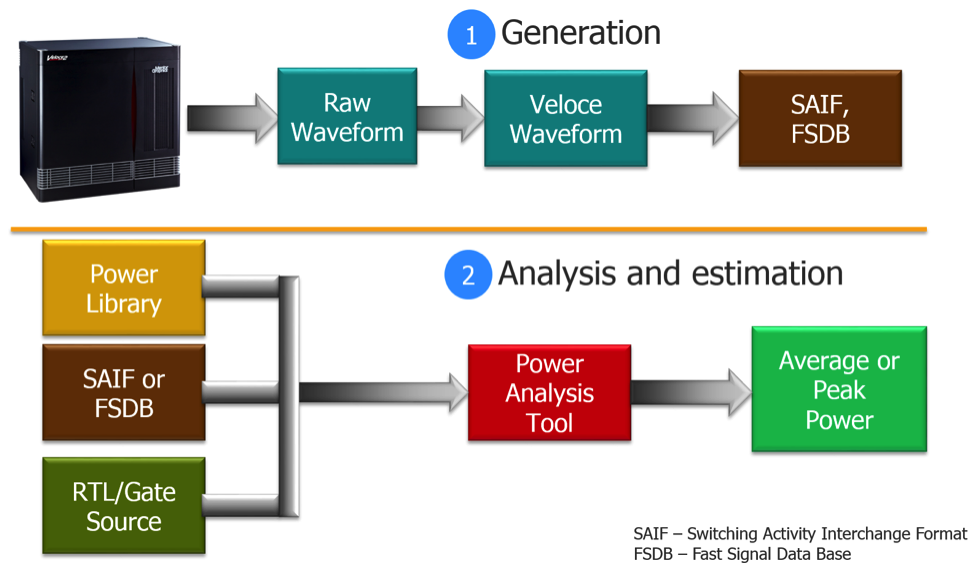

Until now, estimating dynamic power consumption has been a two-step process. First, an RTL simulator or a hardware emulator records the switching activity of the design in a database. Second, a power estimation tool reads that database and calculates the dynamic power consumption.

To estimate average dynamic power consumption, the switching activity of every signal is accumulated over the entire verification session and stored in a Switching Activity Interchange Format (SAIF) file. Conversely, to estimate peak dynamic power consumption accurately, the switching activity of each signal is recorded cycle by cycle in a Fast Signal Database (FSDB) file.
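The difference between the two files can be illustrated with a toy Python model. It is a hypothetical sketch, not the actual SAIF or FSDB formats: the SAIF-like step keeps only a total toggle count per signal, the FSDB-like step keeps the per-cycle record, and the signal names, per-toggle energies (½·C·V_DD²) and 1 GHz clock are invented for illustration.

```python
# Toy model only: real SAIF/FSDB files are tool formats with far richer
# content; these structures just mimic what each one preserves.

CLOCK_PERIOD_S = 1e-9  # assumed 1 GHz clock

# Hypothetical energy per toggle for each signal (0.5 * C * Vdd^2), in joules.
ENERGY_PER_TOGGLE_J = {"a": 2e-15, "b": 1e-15, "c": 4e-15}

# Hypothetical activity: one dict per clock cycle, 1 = the signal toggled.
trace = [
    {"a": 0, "b": 1, "c": 0},
    {"a": 1, "b": 1, "c": 1},  # burst cycle
    {"a": 0, "b": 0, "c": 0},
    {"a": 0, "b": 1, "c": 0},
]

def saif_like(trace):
    """Collapse the time axis: keep only a total toggle count per signal."""
    totals = {sig: 0 for sig in trace[0]}
    for cycle in trace:
        for sig, toggled in cycle.items():
            totals[sig] += toggled
    return totals, len(trace)

def average_power_w(totals, n_cycles):
    """Total switching energy divided by the session duration."""
    energy_j = sum(count * ENERGY_PER_TOGGLE_J[sig] for sig, count in totals.items())
    return energy_j / (n_cycles * CLOCK_PERIOD_S)

def per_cycle_power_w(trace):
    """FSDB-like: cycle-by-cycle activity keeps the time axis."""
    return [
        sum(toggled * ENERGY_PER_TOGGLE_J[sig] for sig, toggled in cycle.items()) / CLOCK_PERIOD_S
        for cycle in trace
    ]

totals, n = saif_like(trace)
avg_w = average_power_w(totals, n)
peak_w = max(per_cycle_power_w(trace))
print(f"average: {avg_w * 1e6:.2f} uW, peak: {peak_w * 1e6:.2f} uW")
```

Both views agree on total energy, but once time is collapsed into per-signal totals the burst in the second cycle can no longer be located, so only the per-cycle data can yield the peak.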

It is important to note that sampling a subset of signals over a limited time window leads to a grossly inaccurate assessment of dynamic power consumption and must be avoided entirely.
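A toy Python example illustrates the time-window half of this warning. The per-cycle power trace is synthetic (a long low-activity phase followed by a short burst), and the window positions are arbitrary choices for illustration:

```python
# Synthetic per-cycle power trace in mW: 900 quiet cycles, then a 100-cycle burst.
trace_mw = [100] * 900 + [500] * 100

def window_stats(trace, start, length):
    """Average and peak power over trace[start : start + length]."""
    window = trace[start:start + length]
    return sum(window) / len(window), max(window)

full_avg, full_peak = window_stats(trace_mw, 0, len(trace_mw))
early_avg, early_peak = window_stats(trace_mw, 0, 200)    # sample only the start
burst_avg, burst_peak = window_stats(trace_mw, 900, 100)  # sample only the burst

print(f"full run:     avg {full_avg:.0f} mW, peak {full_peak} mW")
print(f"first 200:    avg {early_avg:.0f} mW, peak {early_peak} mW")
print(f"burst window: avg {burst_avg:.0f} mW, peak {burst_peak} mW")
```

Sampling the first 200 cycles reports 100 mW for both average and peak, missing the 500 mW burst entirely; sampling only the burst overstates the 140 mW average by more than 3x. Dropping signals has an analogous effect, since their toggles simply vanish from the total.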

Simulators, however, have a problem: their execution speed degrades drastically as design size increases. With designs at or above 100 million gates, a simulator may run at fewer than 10 cycles per second, far too slow for processing embedded software. Only emulators can perform this task in a reasonable amount of time.
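A quick wall-clock calculation shows why. The 10 cycles-per-second figure comes from the text; the workload length of 2 billion cycles (roughly two seconds of a 1 GHz-class processor booting an OS and running a few applications) and the 1 MHz emulator speed are assumed orders of magnitude, not measured Veloce2 numbers:

```python
# Wall-clock time to execute a fixed number of design clock cycles.
# WORKLOAD_CYCLES and EMU_SPEED_CPS are hypothetical assumptions.

SECONDS_PER_DAY = 86_400

def wall_clock_seconds(cycles: float, cycles_per_second: float) -> float:
    """Time needed to run `cycles` design cycles at a given execution speed."""
    return cycles / cycles_per_second

WORKLOAD_CYCLES = 2e9  # assumed: OS boot plus a few applications
SIM_SPEED_CPS = 10     # simulator speed at ~100M gates, as quoted in the text
EMU_SPEED_CPS = 1e6    # assumed emulator speed, order of magnitude only

sim_s = wall_clock_seconds(WORKLOAD_CYCLES, SIM_SPEED_CPS)
emu_s = wall_clock_seconds(WORKLOAD_CYCLES, EMU_SPEED_CPS)
sim_days = sim_s / SECONDS_PER_DAY
print(f"simulator: {sim_days:,.0f} days (~{sim_days / 365.25:.1f} years)")
print(f"emulator:  {emu_s / 60:.1f} minutes")
```

Under these assumptions the simulator needs roughly 2,300 days, more than six years, while the emulator finishes in about half an hour.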

Figure 2 represents the two stages in a scenario where the Mentor Graphics Veloce2 emulator generates SAIF and FSDB files.

Figure 2: Two-step dynamic power estimation via the Mentor Graphics Veloce2 emulation platform.

When applied to large, modern SoC designs, the two-step approach suffers from three severe limitations. First, SAIF files, and far more so FSDB files, are huge and virtually unmanageable. Second, generating these files takes many hours. Third, reading the FSDB files into power analysis tools takes several days or even weeks, an eternity when measured against the development cycle of a modern SoC in a highly competitive market. Together, these three issues defeat the methodology.
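The gap between the two file types follows from simple scaling: a per-cycle record grows with signals × cycles, while per-signal toggle counters grow with the signal count alone. The following sketch uses a hypothetical net count and workload length and computes a raw, uncompressed bound; real FSDB files store only value changes and compress them, and real SAIF files carry hierarchical names and extra fields, so actual sizes differ.

```python
# Raw storage scaling for activity data. SIGNALS and CYCLES are hypothetical.

BITS_PER_BYTE = 8

def per_cycle_bits(signals: int, cycles: int) -> int:
    """Uncompressed upper bound: one bit per signal per clock cycle."""
    return signals * cycles

def per_signal_counter_bits(signals: int, counter_bits: int = 64) -> int:
    """One toggle counter per signal, independent of run length."""
    return signals * counter_bits

SIGNALS = 50_000_000    # assumed net count for a ~100M-gate design
CYCLES = 2_000_000_000  # assumed workload length (OS boot plus applications)

raw = per_cycle_bits(SIGNALS, CYCLES)
counters = per_signal_counter_bits(SIGNALS)
print(f"per-cycle record:    {raw / BITS_PER_BYTE / 1e15:.1f} PB uncompressed")
print(f"per-signal counters: {counters / BITS_PER_BYTE / 1e6:.0f} MB")
print(f"ratio: {raw // counters:,}x")
```

Whatever the exact constants, the per-cycle data is some seven orders of magnitude larger under these assumptions, and it keeps growing with every additional cycle of software executed.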

Realistic dynamic power estimation can only be achieved by booting operating systems, running real applications in a target environment and recording every signal transition at every clock edge. Unfortunately, in an era when SoC designs approach a billion gates, the two-step process based on generating SAIF and FSDB files is no longer practical.

Is it time for the industry to explore and devise a new, nimbler, faster and more efficient methodology? I’m eager to hear what you have to say.