Source: EE Times

Currently, the most widely-used storage device is the Hard Disk Drive (HDD), but its popularity is rapidly declining.

In an earlier column — Digital Data Storage is Undergoing Mind-Boggling Growth — we considered the mind-boggling growth of electronic data, which exceeded 10,000 exabytes or 10 zettabytes in 2016. By 2020, the volume of data is estimated to surpass 50 zettabytes. It’s worth mentioning that there is only one prefix left, the “yotta,” which was established at the 19th General Conference on Weights and Measures in 1991, before we run out of prefixes.

This follow-up column is co-authored by Ben Whitehead, a storage product specialist at Mentor Graphics. In this column we discuss the evolution of data storage technologies and introduce the two predominant storage technologies, the incumbent and its alternative: the hard disk drive (HDD) and the solid state drive (SSD). Following this introduction, the column focuses on the HDD, its functions, and the associated design verification methodology. A future column will concentrate on the SSD and conclude with trends in data storage for the foreseeable future.

Introduction

The history of electronic data storage evolved hand-in-hand with that of the computer. One could not exist without the other. After all, a computer needs storage to hold programs and data.

From the perspective of storage, programs and data are two sides of the same coin. They consist of strings of binary numbers that only computers can make sense of. Depending on how they are used, the storage requirements are rather different. When programs and data are in use concurrently, the media supporting them is called “main memory” or “primary memory” or just “memory.” Conversely, when they are preserved for future use, the media supporting them is called “secondary memory” or just “storage.”

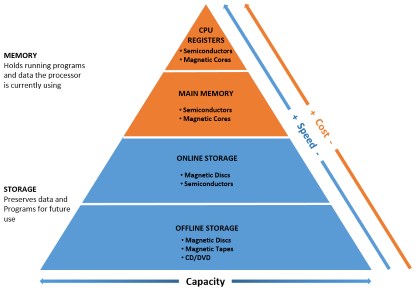

Memory characteristics include fast data storing/retrieving capability, limited capacity, and higher cost compared to storage. On the other hand, storage characteristics comprise significantly larger capacity, but slower data storing/retrieving speed and lower cost than memory. Basically, storage holds far larger amounts of data at lesser cost per byte than memory. Typically, storage is two orders of magnitude less expensive than memory.

Unlike memory, storage can further be classified as online or offline. Figure 1 shows the main characteristics of memory and storage.

The technologies devised for implementing memory and storage that have evolved over time are remarkable examples of human ingenuity. Inventors have exploited mechanical, electromagnetic, electrostatic, electrical, optical, and semiconductor properties. A non-exhaustive list of memory/storage media includes: punched paper cards; punched paper tapes; delay lines (magnetostrictive wires or mercury delay lines); electrostatic memory tubes; charged capacitors; magnetic drums, tapes, cores, and discs; optical discs, and semiconductor chips. Some were very short-lived (e.g., electrostatic memory tubes), while others (e.g., magnetic cores) lasted for a few decades before being retired.

Today, memory is universally implemented using semiconductor chips. By comparison, storage is in the middle of a historical transition from magnetic discs to semiconductors, with the latter rapidly expanding and replacing their magnetic disc counterparts.

Progressively, memory and storage capacity grew from a few bytes to kilobytes, megabytes and gigabytes. Today, the capacity of a storage device — even on a home computer — is often measured in terabytes.

Storage Devices

Storage devices encompass two parts: the media that stores the data and the controller that acts as a “traffic cop,” supervising the flow of binary data in and out of the storage cells. The controller is the brain of a storage device. A poorly designed controller can quickly generate traffic congestion and slow down the computer’s operations.

Hard Disk Drive (HDD)

At the time of this writing, the most popular storage device is the Hard Disk Drive (HDD), but its popularity is rapidly declining. The HDD has been around for six decades or so. Thirty years ago, there were many suppliers of hard drives. Over time, the industry saw massive consolidation that led to a virtual monopoly. Today, three big storage vendors survive: Seagate, Toshiba, and Western Digital, all of whom evolved through acquisitions of dozens of former big players, such as Conner Peripherals, Maxtor, and many others. Emblematic of this trend is the last acquisition of HGST (Hitachi Global Storage Technologies). Regulators split its products into two buckets: the 2.5-inch drives went to Toshiba, while the 3.5-inch drives went to Western Digital.

The three big companies own all the patents, the clean room technology, manufacturing robots, and so on. Basically, they monopolize the HDD industry. One could not acquire another company in the hard disk drive business — not that any still exist — without triggering the involvement of the regulators.

The main reason for this state of affairs is the enormous entry barrier that prevents small players from throwing in their hats. The money and engineering efforts required to create a modern HDD business are massive.

HDD Technology

In an HDD, the media that stores the binary data consists of rotating discs or platters made of glass-coated aluminum alloy with a magnetic material on both sides or surfaces.

Given the nature of the technology, designing an HDD involves several disciplines, including mechanics, acoustics, aeronautics, and electronics. Still, the HDD has not changed that much in the past 10 years or so. The last major enhancement to increase the storage density occurred about seven years ago; this involved the use of perpendicular recording, a rather ingenious endeavor that posed significant engineering challenges. Heat-Assisted Magnetic Recording (HAMR) promises new gains in density, but has been plagued by delays, and is finally due out in 2018.

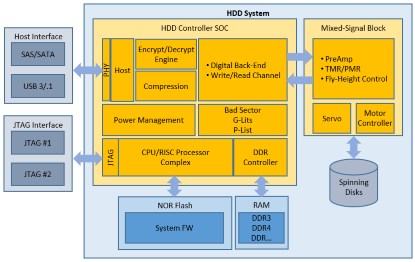

The HDD controller is a jewel of ingenuity. It combines a digital System-on-Chip (SoC) supported by a large amount of firmware that is the brain of the controller along with an analog/digital mixed-signal block that interfaces to the spinning disc (see the block diagram in Figure 2).

The SoC includes a CPU/RISC processor group. ARM cores seem to be the most popular, but Tensilica and ARC (Synopsys) cores are also used. It comprises the host interface, built on either SATA/SAS or USB 3.1 standards, and the digital interface to the analog/digital mixed-signal block.

The SoC also encompasses a few additional functions, such as an encryption/decryption engine, a compression engine, power management, a DDR memory controller, and a mechanism to track bad sectors.

The mixed-signal functional block is the most critical element in an HDD. A conventional motor controller drives the spinning disc, while a servo operates the arm and reading/writing head. The Fly Height Control encompasses the aeronautics used to fly the head only a few nanometers from the surface of the media without touching it.

Recently, an HDD designer drew an analogy to explain the level of precision required. It is like a Boeing 747 flying 1/32nd of an inch (for non-US readers, that’s about 0.8 mm) off the ground, without ever touching it, at nearly the speed of sound. Statistically, the head touches the media every 20 seconds or so, and the resulting friction causes a thermal event with potential loss of data. The loss is taken care of by a “go-around” of the head to re-read or re-write the data at that point.

The level of analog signal processing (ASP) required is increasing constantly with time. Signal-to-noise ratios continue to diminish, and algorithms like Partial Response, Maximum Likelihood (PRML) are used to reconstruct a bitstream. This is performed over a long stream of bits with elaborate mathematics in a short processing window.
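To make the PRML idea concrete, here is a minimal sketch in Python. It assumes the simplest partial-response target, the "dicode" channel y[k] = a[k] - a[k-1], chosen here only for brevity; production read channels use higher-order targets and far more elaborate detectors. The function names and the noise level are our own illustrative choices, not anything taken from a real read channel.

```python
import random

def dicode_channel(bits, noise_sigma, rng):
    """Partial-response samples y[k] = a[k] - a[k-1] plus Gaussian noise.

    Assumes the bit before the first one is 0.
    """
    prev = 0
    samples = []
    for b in bits:
        samples.append((b - prev) + rng.gauss(0.0, noise_sigma))
        prev = b
    return samples

def viterbi_detect(samples):
    """Maximum-likelihood sequence detection over the 2-state dicode trellis."""
    inf = float("inf")
    # State = previous bit; only state 0 is reachable before the first sample.
    cost = [0.0, inf]
    paths = [[], []]
    for r in samples:
        new_cost = [inf, inf]
        new_paths = [[], []]
        for prev in (0, 1):
            if cost[prev] == inf:
                continue
            for bit in (0, 1):
                expected = bit - prev  # noiseless channel output on this branch
                c = cost[prev] + (r - expected) ** 2
                if c < new_cost[bit]:
                    new_cost[bit] = c
                    new_paths[bit] = paths[prev] + [bit]
        cost, paths = new_cost, new_paths
    best = 0 if cost[0] <= cost[1] else 1
    return paths[best]

if __name__ == "__main__":
    rng = random.Random(2017)
    written = [rng.randint(0, 1) for _ in range(32)]
    read_back = viterbi_detect(dicode_channel(written, 0.2, rng))
    errors = sum(w != r for w, r in zip(written, read_back))
    print(f"bit errors: {errors} of {len(written)}")
```

The point of the sketch is that the detector never decides a bit from a single sample. It scores whole candidate sequences against the known channel response and keeps the cheapest one, which is what lets it tolerate a poor signal-to-noise ratio.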

TMR, or Tunnel Magneto-Resistance, and now PMR, for Perpendicular Magnetic Recording, allowed massive increases in bit-density.

The firmware controls all HDD operations. It is the volume and complexity of the firmware that sets an HDD design apart from most other embedded systems.

HDD design is anything but simple. The most complex and challenging blocks are developed by third-party intellectual property (IP) vendors, chiefly Marvell and Broadcom. They sell the IP blocks to the three remaining HDD manufacturers (Seagate, Toshiba, and Western Digital), who integrate them into their HDD systems.

HDD Verification and Validation

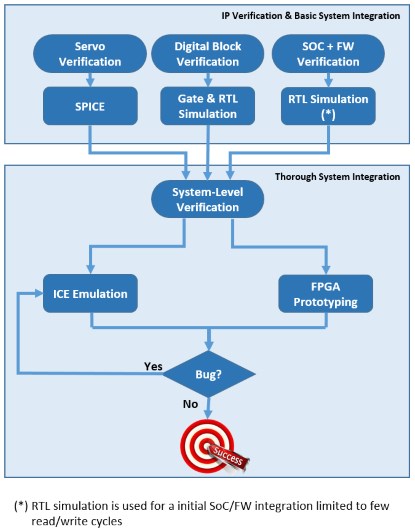

Verifying an HDD controller is a daunting task. Its composition, which combines analog and mixed-signal IP with a traditional digital SoC and robust firmware, necessitates a hierarchical, multi-stage methodology (Figure 3).

The approach starts at the IP level, where verification is performed by the IP vendors. The servo is verified with a SPICE simulator, although the complexity of the analog functions makes it difficult to model their behavior accurately in an overall system. As discussed later, the analog functions are tested at the system level via in-circuit emulation (ICE).

The verification of the hardware portion of the digital SoC is straightforward and like that of any other embedded design. Gate-level and register transfer level (RTL) simulation are used at the block level, driven by functional coverage until reaching virtually 100% coverage. It should be noted that, lately, formal verification methodologies are deployed as well.
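As a rough illustration of what "driven by functional coverage" means, the Python sketch below generates random stimulus until every coverage bin has been hit. The command set, the length buckets, and the function names are hypothetical; a real testbench would be written in a hardware verification language and would drive the block under test where the comment indicates.

```python
import itertools
import random

COMMANDS = ("read", "write", "verify")      # hypothetical command set
LENGTH_BINS = ("single", "short", "long")   # hypothetical transfer-length buckets

def length_bin(n_sectors):
    """Map a transfer length to its coverage bucket."""
    if n_sectors == 1:
        return "single"
    return "short" if n_sectors <= 8 else "long"

def run_until_covered(rng, max_tests=10_000):
    """Generate random tests until every (command, length) bin is hit."""
    goal = set(itertools.product(COMMANDS, LENGTH_BINS))
    hit = set()
    tests = 0
    while hit != goal and tests < max_tests:
        cmd = rng.choice(COMMANDS)
        n_sectors = rng.randint(1, 64)
        # A real bench would drive the block under test here and check results.
        hit.add((cmd, length_bin(n_sectors)))
        tests += 1
    return tests, len(hit) / len(goal)

if __name__ == "__main__":
    tests, coverage = run_until_covered(random.Random(1))
    print(f"{tests} random tests reached {coverage:.0%} coverage")
```

Rare bins (here, single-sector transfers) dominate the test count, which is why coverage closure tends to slow down as it approaches 100%.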

Full SoC-level verification is performed in the context of system-level integration with the firmware.

Initially, RTL simulation is used at the system level, but the limited performance of RTL simulators restricts verification to basic operations: at most, one hundred or so read/write cycles between adjacent tracks. At this stage, the servo and analog functions are replaced with a simple model.

Thorough system-level integration and firmware validation require the execution power of hardware-assisted verification engines in the form of hardware emulation and FPGA prototyping, two critical items in the verification team’s toolbox.

Hardware emulation provides the performance necessary to execute the vast number of clock cycles, in the hundreds of millions or even billions, required for firmware execution. In this regard, FPGA prototyping is even more powerful in terms of clock cycles processed per second, but it does not provide the debugging capabilities essential for tracing bugs in the hardware and firmware.
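A back-of-the-envelope calculation shows why the choice of engine matters. The Python sketch below converts a cycle count into wall-clock time. The effective clock rates are order-of-magnitude assumptions of ours, not measurements from any particular tool or design.

```python
# Effective clock rates are illustrative assumptions, not figures from this column.
ASSUMED_RATES_HZ = {
    "RTL simulation": 100.0,
    "hardware emulation": 1_000_000.0,
    "FPGA prototyping": 20_000_000.0,
}

def wall_clock_seconds(cycles, rate_hz):
    """Time needed to execute `cycles` design clock cycles at `rate_hz`."""
    return cycles / rate_hz

def describe(seconds):
    """Render a duration using the largest convenient unit."""
    for unit, size in (("days", 86_400), ("hours", 3_600), ("minutes", 60)):
        if seconds >= size:
            return f"{seconds / size:.1f} {unit}"
    return f"{seconds:.1f} seconds"

if __name__ == "__main__":
    firmware_cycles = 1_000_000_000  # one billion cycles of firmware execution
    for engine, rate in ASSUMED_RATES_HZ.items():
        print(f"{engine}: {describe(wall_clock_seconds(firmware_cycles, rate))}")
```

Under these assumed rates, a billion cycles takes about 115.7 days in RTL simulation, about 16.7 minutes on an emulator, and 50 seconds on an FPGA prototype.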

As mentioned earlier, the firmware is an integral part of the whole system, and changes to the firmware are considered at the same level as changes to the hardware. In fact, and contrary to conventional wisdom, engineering teams sometimes opt to fix a firmware bug by changing the affected hardware to avoid the risk of altering the operations of the HDD system.

FPGA prototyping and emulation coexist in the validation and verification methodology. FPGA prototyping is used for system validation, while emulation is used for tracking down bugs.

In-Circuit-Emulation for HDD Verification and Validation

Today, hardware emulation can be used in one of two broad modes of deployment. In the traditional ICE mode, the design-under-test (DUT) mapped inside the emulator is physically connected to the real target system in place of the to-be-taped-out silicon chip. The physical target system sends stimuli and receives responses from the DUT. In a modern virtual mode — devised and perfected over the past decade or so — the DUT mapped inside the emulator is connected to a soft-model of a target system via a transactional interface that sends stimuli and receives responses from the DUT.
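The virtual mode can be pictured with a small Python sketch in which a soft host model talks to the DUT only through transactions. Every name here (`Transaction`, `Transactor`, `FakeEmulatedDut`, `soft_host_model`) is hypothetical and does not correspond to any vendor's API. The in-memory class merely stands in for the emulator side of the link so that the example runs on its own.

```python
from dataclasses import dataclass
from typing import Optional, Protocol

@dataclass(frozen=True)
class Transaction:
    """One high-level request crossing the testbench/emulator boundary."""
    op: str                       # "write" or "read"
    lba: int                      # logical block address
    data: Optional[bytes] = None  # payload for writes

class Transactor(Protocol):
    """Anything that can carry a transaction to the DUT and return its response."""
    def send(self, txn: Transaction) -> Optional[bytes]: ...

class FakeEmulatedDut:
    """In-memory stand-in for the DUT mapped inside the emulator."""
    def __init__(self):
        self._sectors = {}

    def send(self, txn):
        if txn.op == "write":
            self._sectors[txn.lba] = txn.data
            return None
        if txn.op == "read":
            return self._sectors.get(txn.lba)
        raise ValueError(f"unsupported operation: {txn.op}")

def soft_host_model(link: Transactor, lba: int, payload: bytes) -> bool:
    """Soft model of the target system: write a block, read it back, compare."""
    link.send(Transaction("write", lba, payload))
    return link.send(Transaction("read", lba)) == payload

if __name__ == "__main__":
    ok = soft_host_model(FakeEmulatedDut(), lba=7, payload=b"sector-data")
    print("read-back matches" if ok else "read-back mismatch")
```

Because the host side is software, it can be swapped, scripted, and run remotely. In ICE mode the same role is played by physical hardware cabled to the emulator.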

The difficulty in accurately modeling the analog portions of the HDD design favored the deployment of the emulation system in ICE mode. Verification engineers assemble all the HDD pieces, hard IP, and soft models for the SoC and debug the controller at the system level.

It should be noted that, as verification engineers move from the individual component to a full system, with multiple hardware components interacting with firmware, complexity grows exponentially. It is not uncommon to discover unforeseen sequences that only manifest themselves at the system level as classic “corner-case” bugs.

Issues that must be addressed in verification include:

- Testing all host interface standards (SAS/SATA, USB 3.1).

- Security (FIPS) certification with third-party IP.

- Encrypted third-party IP debug at the system level.

- Power constraints.

A dilemma arises: if the IP were to be verified with every possible combination of the system configurations it would go into, the verification cost would be prohibitive and testing would take too long. Since HDD schedules are aggressive, companies are willing to take shortcuts, get imperfect IP blocks, integrate them into the system, and then find bugs that are related to each specific system configuration. Once fixed, they ship the product. It’s an economic trade-off that works… most of the time.
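The size of the problem is easy to underestimate, so here is a small Python sketch that counts configurations. The host interfaces come from the list above. Every other dimension, its values, and the hours-per-regression figure are hypothetical and serve only to show how quickly the product grows.

```python
import itertools
from math import prod

# Only "host_interface" is taken from the text; the rest is hypothetical.
CONFIG_SPACE = {
    "host_interface": ["SATA", "SAS", "USB 3.1"],
    "encryption": ["off", "on"],
    "compression": ["off", "on"],
    "platter_count": [1, 2, 3, 4, 5],
    "firmware_build": ["a", "b", "c", "d"],
}

def count_configs(space):
    """Number of distinct system configurations in the full cross product."""
    return prod(len(values) for values in space.values())

def enumerate_configs(space):
    """Yield each configuration as a dict, one per point of the cross product."""
    keys = list(space)
    for combo in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, combo))

if __name__ == "__main__":
    total = count_configs(CONFIG_SPACE)
    hours_per_regression = 6  # assumed cost of one full regression run
    print(f"{total} configurations, {total * hours_per_regression} regression hours")
```

Even this toy space yields 240 configurations, or 1,440 regression hours at the assumed six hours each. Adding one more two-valued option doubles both numbers.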

While the HDD is moving away from the leading position it enjoyed for two decades to be replaced by the SSD in everything from laptops to data centers, it will remain the leader in inexpensive, large-capacity devices for decades to come. The number of innovations and changes to the HDD technology is rather limited, making iterative changes relatively low-risk.

Final Considerations

Will the HDD industry switch its use of hardware emulation from ICE to virtual mode and gain the benefits that come with this approach, from multi-user to remote access, which are essential attributes for deploying the emulator in a data center? We predict the answer is “no.”

Engineers have been comfortable using the ICE approach for many years. They’ve gained invaluable experience, the approach works well, and the risk of failure associated with a change in verification methodology is too high.

As it happens, however, emulation deployed in virtual mode fits perfectly in the verification and validation methodology of SSD designs, which will be the topic of our next article.

Dr. Lauro Rizzatti is a verification consultant and industry expert on hardware emulation (www.rizzatti.com). Previously, Dr. Rizzatti held positions in management, product marketing, technical marketing, and engineering. He can be reached at lauro@rizzatti.com.

Ben Whitehead has been in the storage industry developing verification solutions for nearly 20 years. He has been with LSI Logic and Seagate, and has most recently managed SSD controller teams at Micron. His leadership with verification methodologies in storage technologies led him to his current position as a Storage Product Specialist at Mentor Graphics.